A long time ago, in a country far, far away(*), one Melvin Conway made the sociological observation that the structure of the IT solutions in an organisation followed the structure of the organisation itself. Frederick Brooks, in his seminal book The Mythical Man-Month, christened it Conway’s Law, and the name stuck. I think that Conway’s Law, and what is happening to it, is a sign that our ‘information revolution’ has reached a tipping point.

In short, what Conway observed was that if — for example — an organisation had separate accounting and reporting departments, it had separate accounting and reporting IT solutions. But if — for instance — it had a combined accounting-and-reporting department, it had a combined accounting-and-reporting IT solution. In other words: the structure of IT followed the structure of the organisation.

This was generally true 50 years ago. But it is changing now, and this change suggests we have reached a tipping point in the ongoing information revolution. Let me explain.

What have we done?

If you look at the last 50 years, we have added an enormous amount of ‘logical behaviour’ to our societies (and thus to the world).

Before computers, logical behaviour was extremely rare in the world. We humans were able to perform some of it, but our capacity for it is puny compared to that of computers. We are, however, (probably) unique in nature in being able to do it at all, and this ability defines how our behavioural intelligence differs from that of other species. At least partly as a result, we are the most successful complex organism on earth, and the one that has been able to colonise the widest range of niches: from cold to hot, dry to wet, low to high, and so on.

So it is not that strange that, when super-fast machine logic appeared in the 1940s, we believed it would enable us to build truly intelligent machines. After all, we believed (and most of us still do) that the essence of our intelligence is logic. As far as I’m concerned, that idea has been pretty much debunked, whatever advances (both real and imagined) are being made in digital AI or analytics. You can read more about it in this article: Something is (still) rotten in Artificial Intelligence. But that is not the topic of this post.

The topic of this post is that, regardless of whether that logic is ‘intelligent’, in roughly half a century we have added an unprecedented and unbelievable volume of it to the world. And given the fundamental properties of such logic(**), this is having an ever greater effect on human society and, through human society, on the world.

One way a key property of logic shows itself is that large, complex logical landscapes (that is us, these days) are increasingly hard to change. Large transformations in or with IT are slow, costly, and often fail. Why? Why is it that, instead of getting better and better at it, we seem to get slower and slower? Because, contrary to what is often said, change is not only speeding up; in the reality of organisations, it is often also slowing down. Changing a core system in your landscape takes more time and money than constructing a large new building. Change in IT is hard. (***)

But what makes it so hard?

Three things make it hard: (1) the sheer volume of interconnected logic; (2) the limitations of us humans when working with logic — we’re better at frisbee; and (3) the brittleness of logic.

The brittleness of logic

To start with the last of these: for all its strong points, logic has one major Achilles’ heel. It is brittle. Logic is extremely sensitive to the correctness of its inputs. Where we humans can work very well with estimates and approximations, logical machinery can’t. One wrong bit and the whole thing may not work, or may even come crashing down. A little broken often means completely broken. Or, to take a security example: one small vulnerability and the criminal is ‘in’.
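To make ‘a little broken often means completely broken’ concrete, here is a minimal Python sketch (an illustration of my own choosing, not taken from any particular system). It uses the standard `json` module to parse a small, hypothetical pension record, then inverts a single bit of the first byte and parses it again. A human reader would hardly notice the damage; the parser rejects the whole record.

```python
import json


def flip_bit(data: bytes, byte_index: int, bit: int) -> bytes:
    """Return a copy of `data` with exactly one bit inverted."""
    damaged = bytearray(data)
    damaged[byte_index] ^= 1 << bit
    return bytes(damaged)


def load(data: bytes):
    """Parse a JSON record; return None if the parser rejects it."""
    try:
        return json.loads(data)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return None


# Hypothetical example record.
record = b'{"pension_id": 42, "amount": 1250.0}'
damaged = flip_bit(record, 0, 0)  # '{' (0x7B) becomes 'z' (0x7A)

print(load(record))   # {'pension_id': 42, 'amount': 1250.0}
print(load(damaged))  # None
```

Flipping bit 0 of `{` (0x7B) yields `z` (0x7A). Nothing else about the record changes, yet `load` returns `None` instead of a usable record.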

The complexity of dependencies between all those brittle parts is more than we humans can handle. And to top it off, although we are the best logicians of all species on earth, we humans are actually pretty bad at logic. We create that logic, but we cannot foresee every combination and we make errors. Lots of them.

And no, digital computers are not going to help us out on this one. Digital computers are, in the end, rule-based machines, even when the rules are hidden from view. In short, the problem is that there are no rules to decide which rules to use. And while we can do some things to help us out here (e.g. statistics), the solutions are inefficient (consider, for example, the large energy cost of machine learning in particular) and remain brittle.

So, given that the brittleness of (machine) logic has been with us for quite a while, what do we normally do to manage the problems of using logic?

[Drum roll…] We add more logic!

E.g.:

- We add all kinds of abstractions: object-orientation, service-oriented architectures, frameworks, virtual machines instead of just physical machines;

- We add more of the same: for instance, we create high availability clusters of identical hardware behind ‘load balancers’ to counter the failure of a single server;

- We add additional logical behaviour: input/status checking code, backups, monitoring, event management, testing during change, analytics, the list goes on and on.
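As a rough illustration of the second and third bullets, the following Python sketch puts a round-robin ‘load balancer’ in front of identical servers and fails over when one of them is down. The `Server` and `LoadBalancer` classes are hypothetical and greatly simplified; real load balancers handle health checking quite differently. Note that the failover code is itself new logic: a bug in it, or a failure of the balancer itself, makes every server unreachable at once.

```python
from __future__ import annotations

from itertools import cycle


class Server:
    """A hypothetical stand-in for one of several identical servers."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


class LoadBalancer:
    """Round-robin over identical servers, failing over to the next one on error."""

    def __init__(self, servers: list[Server]) -> None:
        self.servers = servers
        self._ring = cycle(servers)

    def handle(self, request: str) -> str:
        # Try each server at most once per request before giving up.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            try:
                return server.handle(request)
            except ConnectionError:
                continue  # the added logic: skip the broken server
        raise ConnectionError("all servers are down")


servers = [Server("a"), Server("b", healthy=False), Server("c")]
lb = LoadBalancer(servers)
results = [lb.handle(r) for r in ("r1", "r2", "r3")]
print(results)  # ['a handled r1', 'c handled r2', 'a handled r3']
```

The redundancy hides the failure of server `b`, but only by adding code whose own correctness now matters as much as that of the servers it protects.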

In other words: to address the troublesome effects of having huge amounts of interconnected, brittle logic, we add more (interconnected, brittle) logic, with its own additional brittleness, of course. There is a whiff of a dead end here.

In the ‘digital enterprise’, only a (small) part of all that machine logic is ultimately about the primary function, such as ‘paying a pension’ or ‘selling a book’. Most of it is about (a) keeping the whole logical edifice from falling apart or falling down in one way or another, and (b) remaining able to change it.

In control?

Summarising: the volume of brittle machine logic in the world has ballooned, and the whole landscape of interdependent logical behaviour is too complex for us humans to be completely in control of it. For years we have seen ever larger top-down, waterfall-like approaches (of which orthodox enterprise architecture is a part) to ‘change’ in digital landscapes, but that approach is now dying.

What we see happening instead are bottom-up approaches to the problem, such as Agile/DevOps. This is not entirely new. Software engineering approaches such as object-orientation (which was supposed to fix the same problem for us at the application level in the 1980s) and service-oriented architecture (which was supposed to fix it at the application-landscape level in the 1990s) have long shown a tendency towards bottom-up abstraction as a way to handle interconnected, brittle complexity.

And now these bottom-up approaches, born in software engineering, have reached the level of the human organisation. The volume of IT (of machine logic) has become so large, and the inertia (‘resistance to change’) of all that interconnected brittleness so great, that we humans have started to adapt to the logic instead of trying to adapt the logic to us. After all, we’re the ones with flexible and robust behaviour.

Case in point: we increasingly organise ourselves in Agile/DevOps teams around IT solutions, with ‘Product Owners’. We then combine various product teams into organisational entities called ‘Value Streams’ (e.g. in SAFe, a leading agile method). In other words, instead of having IT solutions reflect the organisation (Conway’s Law), we increasingly have the human organisation reflect the structure of IT.

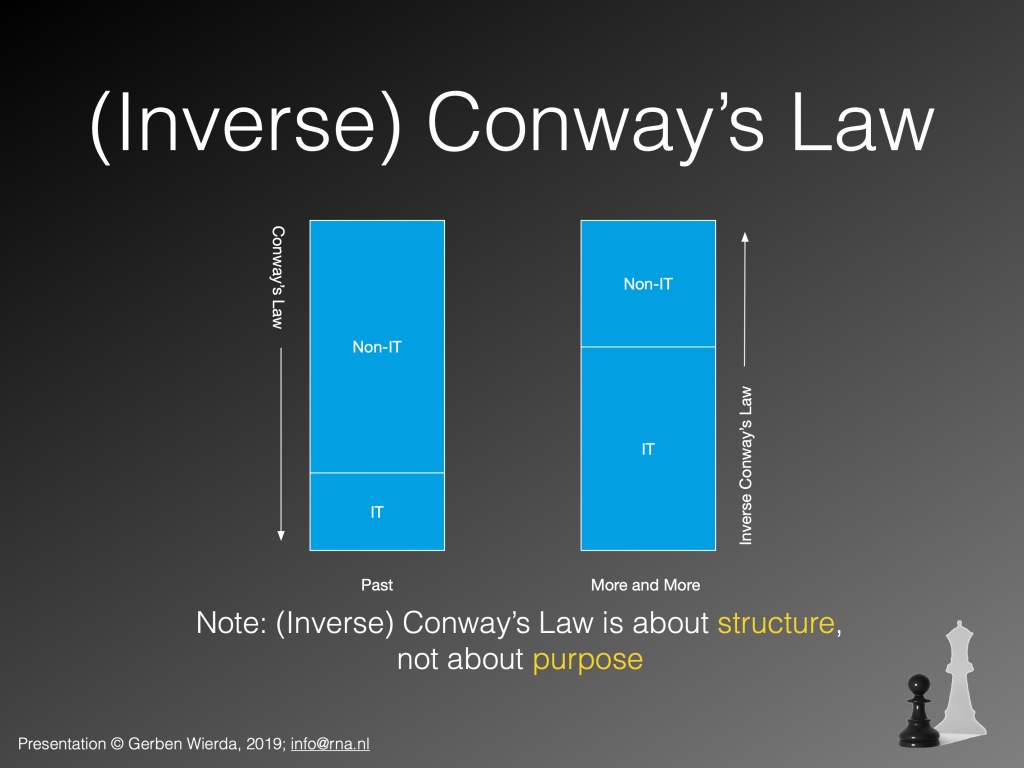

In a slide from my workshop:

So, whereas it used to be “business purpose leads to business structure leads to IT structure”, more and more we are seeing “business purpose leads to IT structure leads to business structure”. Inverse Conway’s Law.

This is what the buzzwords ‘digital enterprise’ and ‘digital transformation’ stand for: us (flexible) humans adapting to the weight and inertia of the massive amounts of (inflexible) brittle machine logic that we have brought into the world. The IT does not just adapt to our wishes; we adapt to the demands of using all that IT. A true tipping point, and the switch from Conway’s Law to Inverse Conway’s Law illustrates it.

What next?

What we clearly haven’t adapted to yet is the effect that the new ‘primacy of logical behaviour’ is having on our social structures. Just as the industrial revolution eventually spawned political movements to curb its excesses, something similar still has to happen with the information revolution. Three questions are on my mind about that: (1) How bad will the excesses (already visible) become before we act? (2) Will we be able to act (sensibly), given our human limitations? (3) Will there be enough time to curb the excesses before they enable or cause irreparable damage?

[Update 15/Feb/2020] After reading several comments, I feel the need for a small update to correct some interpretations of my writing that I have seen people make. The first addition concerns the role of all that IT, of the ‘information inertia’ effect. I do not argue that IT, rather than we humans ourselves, is driving our world. We are still driving it and making the choices. I think the changed situation could best be compared to skiing a slalom with a weight attached to one’s waist by a short string. The speed has gone up because of the weight, but the inertia of the weight (all that IT) makes it harder to be agile. The weight does not have an active ‘will’, though. The human now has to manipulate the weight (which is hard) in order to do the slalom.

Expanding the notion:

- Conway’s Law: Business purpose (human will) leads to business structure (with human behaviour) leads to IT (with machine logic behaviour);

- Inverse Conway’s Law: Business purpose (human will) leads to IT structure (with machine logic behaviour) leads to business structure (with human behaviour).

What I am describing, therefore, is not the (IT) tail wagging the (business) dog, just as the weight in the slalom example is not doing the slalom. But the human doing the slalom has to adapt to the inertia of large complex IT, and changing direction thus becomes harder.

Secondly, I use the notion of Value Stream as an IT-driven business structure. The notion of a Value Stream is actually much older and predates massive IT. But in SAFe, it is more or less a roll-up of (IT) product teams, and thus more bottom-up. That is logical, because the ‘value’ in a Value Stream increasingly comes from what IT does. [/Update 15/Feb/2020]

(*) 1968, the US (paraphrasing this one, of course)

(**) There are different forms of logic, quantum logic for instance. The logic we have added to the world is the classical kind; in fact, digital computers are machines that execute classical logic. There are attempts to build machines based on other kinds of logic, e.g. quantum computing. Why quantum logic (as executed by quantum computers) is not the logical (heh) answer to the limitations of classical logic, you can read here: To be and not to be — is that the answer?

(***) The interconnected whole has a form of inertia: it resists change. So you can feel a bit less guilty about projects being late and expensive. Some of it is not your fault. Some of it is just a fact of the world. Some of it is ‘information inertia’. Of course, you can still make matters much worse than necessary. E.g. by not cleaning up.

PS. Regarding Enterprise Architecture: some argue that the new bottom-up Agile/DevOps ways of working are only ways to manage the ‘fast change’ on the ‘outside rim’ of an enterprise, while the IT core is to be seen as a classic, efficiency-driven, stable foundation. Examples are Gartner’s ‘Mode 1/Mode 2’ and Jeanne Ross’s ‘Designed for Digital’. I think Gartner’s Mode 1/Mode 2 is nonsense, because the same demands (e.g. security, continuity, integrity) hold for Mode 2 as for Mode 1. There is no real separation between these elements in the landscape; they are all (loosely) coupled in the end, and these couplings, loose or not, add to the total inertia and complexity. Such ‘dual’ perspectives may merely show that their authors are halfway through changing from one perspective to another. Time will tell, but I think the change merits a completely different way of looking at enterprise architecture and at what architecture is.

The original of this article can be found here.
